Background

I have a plan to setup LogStash, ElasticSearch and Kibana to monitor and analyze log files from production tomcat servers. It was a smooth start to install and play around all these software. But then I was stuck for almost two days to figure out a way to parse the information from the log files. After tons of experiments, I finally understand them better and figured out how to analyze the log files from Tomcat.

Lessons learned

--even the popular open source products, their documents are still not complete and somewhat hard to understand, and many of solutions searched out are immature and useless.--copying other people's examples is a good way to get started, but productivity is from fully understanding how the thing works.

--when learning, you can have a look at other people's complex work, but you'd better to start simple and build it up knowledge gradually so that you had less chance to spend time on wrong directions.

Solutions

There are two types of log files I want to analyze, one is having format like this:

[2015-05-15 13:03:15,999] WARN [thread-1-1] some message 1111

part of first message

third row of first message

[2015-05-15 13:04:15,999] WARN [thread-1-2] some message 22222

Another has format like this:

May 25, 2015 2:26:22 AM com.sun.xml.ws.server.sei.TieHandler createResponse

SEVERE: PreparedStatementCallback; uncategorized SQLException for SQL [xxxx]; SQL state [72000]; error code [20000]; ORA-20000: Concurrency Failure

ORA-06512: at "xxxx", line 10

ORA-04088: error during execution of trigger xxxx'

....

May 25, 2015 2:26:40 AM javaclass method....

I decided to define two TCP port each type of log files so that processing can be logically separated.

This is the extra patterns I defined. They are put in a file called amaPatterns.

amaPatterns:

EVERYTHING (.*)

SSS (?:[\d]{3})

MYTIME %{YEAR}-%{MONTHNUM}-%{MONTHDAY} %{HOUR}:%{MINUTE}:%{SECOND},%{SSS}

CATALINA_DATESTAMP %{MONTH} %{MONTHDAY}, 20%{YEAR} %{HOUR}:?%{MINUTE}(?::?%{SECOND}) (?:AM|PM)

CATALINALOG %{CATALINA_DATESTAMP:logTime} %{JAVACLASS:class} %{NOTSPACE:methodName}\n%{LOGLEVEL:logLevel}: %{EVERYTHING:logMsg}

LogStash Configuration

This is the configure file for LogStash. It's defined in a file called first_config.cfg.first_config.cfg:

input {

#if elasticSearch has explicit name, then it should be used here

#I used stdin for testing purpose. real log files are fed to tcp port 666

#nc localhost 6666

tcp {

type => "catalina.out"

port => 6666

add_field => { "server" => "esb-java" fileSrc =>"cata.out" }

}

tcp {

type => "catalina.date"

port => 6667

add_field => { "server" => "esb-java" fileSrc =>"cata.date" }

}

#for debugging purpose

#when testing content from different tunnel, change type here correspondingly so you can

#use stdin as input for that branch of processing

# stdin {

# type => "catalina.date"

# }

#by default, every line is an event to logstash.

#multiline filter can combine lines/events into one event

#this definition means, for all the events that do not match given pattern,

#they should be merged to previous event

#in my case, a line start with [yyyy-mm-dd is a matching, all subsequent lines without matching will

#be merged into this matched line and dump out as one event

filter {

if [type] == "catalina.out" {

multiline {

patterns_dir => "/root/logstash-1.5.0/patterns"

pattern => "\[%{MYTIME}\]%{EVERYTHING}"

negate => true

what => "previous"

}

#this filter parse out logTime, logLevel and rest of content as logMsg

grok {

patterns_dir => "/root/logstash-1.5.0/patterns"

match => [ "message", "\[%{MYTIME:logTime}\] %{LOGLEVEL:logLevel} %{EVERYTHING:logMsg}" ]

}

#if "_grokparsefailure" in [tags] {

# grok {

# patterns_dir => "/root/logstash-1.5.0/patterns"

# match => [ "message", "%{CATALINALOG}" ]

# }

}

#filters are executed per sequence defined here

#last filter's output event will be next filter's input event--first input event is a line of data

#input event's fields can be further manipulated for untouched

#it's better to enable the filters one by one in order to make sure the up stream ones are

#working properly before moving to down stream ones

if [type] == "catalina.date" {

multiline {

patterns_dir => "/root/logstash-1.5.0/patterns"

pattern => "%{CATALINA_DATESTAMP}%{EVERYTHING}"

negate => true

what => "previous"

}

grok {

patterns_dir => "/root/logstash-1.5.0/patterns"

match => [ "message", "%{CATALINALOG}" ]

}

}

#for logTime, specifying its format here. Date filter will convert it to date type and

#assign this time as this event's event timestamp

#if you do not have this, event's timestamp will be the time the event is given to logStash

#by default, index name is ended with day of message's timestamp. so if the timestamp is mapped

#to past, an index named with past date will be created. Also, it does not matter how many input files

#you have, the index name will be only correlated to time stamps the event happened. e.g. one input #will end up with multiple indices suffixed with a date in the past.

date {

match => [ "logTime", "yyyy-MM-dd HH:mm:ss,SSS", "yyyy-MM-dd HH:mm:ss,SSS Z", "MMM dd, yyyy HH:mm:ss a" ]

}

}

output {

if [fileSrc] == "cata.out" {

elasticsearch {

host => localhost

cluster=>emaES

index => "logstash-cata-out-%{+YYYY.MM.dd}"

}

}

if [fileSrc] == "cata.date" {

elasticsearch {

host => localhost

cluster=>emaES

index => "logstash-cata-date-%{+YYYY.MM.dd}"

}

}

# stdout { codec => rubydebug }

}

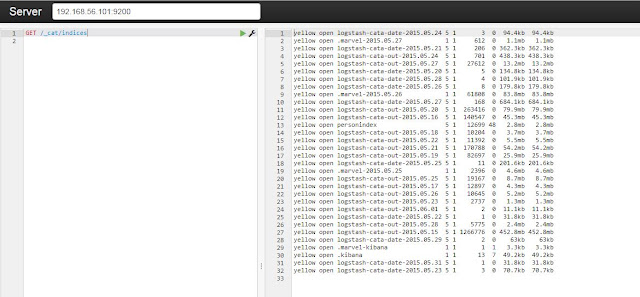

Sample Output

Sample output in the stdout after it works. This example is for type of catalina.out. First four lines are inputs. Please notice the @timestamp has been changed to time in the log rows.

[2015-05-15 13:03:15,999] WARN [thread-1-1] some message 1111

part of first message

third row of first message

[2015-05-15 13:04:15,999] WARN [thread-1-2] some message 22222

{

"message" => "[2015-05-15 13:03:15,999] WARN [thread-1-1] some message 1111\npart of first message\nthird row of first message",

"@version" => "1",

"@timestamp" => "2015-05-15T20:03:15.999Z",

"host" => "hadoop1.hadooptest",

"tags" => [

[0] "multiline"

],

"logTime" => "2015-05-15 13:03:15,999",

"logLevel" => "WARN",

"logMsg" => " [thread-1-1] some message 1111\npart of first message\nthird row of first message"

}

{

"message" => "[2015-05-15 13:04:15,999] WARN [thread-1-2] some message 22222",

"@version" => "1",

"@timestamp" => "2015-05-15T20:04:15.999Z",

"host" => "hadoop1.hadooptest",

"logTime" => "2015-05-15 13:04:15,999",

"logLevel" => "WARN",

"logMsg" => " [thread-1-2] some message 22222"

}

Loading log files to ElasticSearch through LogStash

For catalina.date files, do this

nc localhost 6667

For catalina.out, do this

nc localhost 6667

1 comment:

Very nice article

Post a Comment